The Open-Source Powerhouse Rivaling Proprietary Giants: Qwen3-Coder-480B-A35B-Instruct

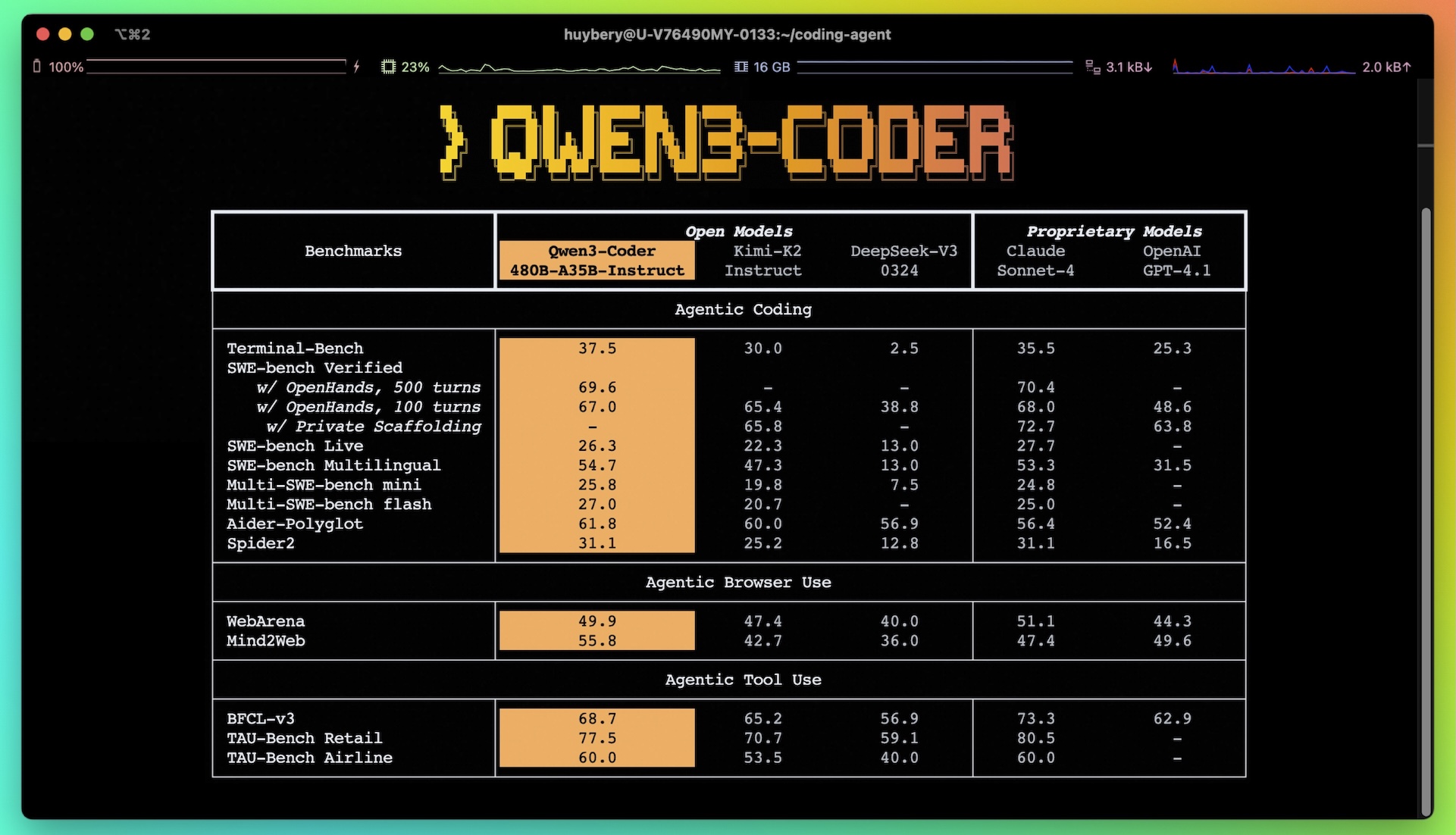

In the rapidly evolving landscape of AI-powered coding assistants, Qwen3-Coder-480B-A35B-Instruct stands out as an open-source model that challenges proprietary giants such as Anthropic's Claude 4 and OpenAI's Codex. Developed by Alibaba's Qwen team, the model is free to download and use, yet the team reports performance comparable to its expensive counterparts on agentic coding tasks. That makes it a serious option for developers and businesses alike.

Key Features

1. Performance Comparable to Claude Sonnet

According to benchmarks published by the Qwen team, Qwen3-Coder-480B-A35B-Instruct achieves results comparable to Claude Sonnet on agentic coding tasks, where the model plans and carries out multi-step changes using tools. This makes it a viable alternative for developers who want high-quality coding assistance without the hefty price tag.

2. Long-Context Capabilities

The model has a native context window of 256K tokens, which can be extended to 1M tokens with YaRN, a RoPE-scaling technique. This makes it well suited to repository-scale understanding: large portions of a codebase fit into a single prompt, which helps on large and complex projects.
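
Extending the context with YaRN usually comes down to one `rope_scaling` entry in the model configuration. The sketch below derives that entry. It assumes that "256K" means 262,144 tokens, that "1M" means 1,048,576 tokens, and that the key names follow the Hugging Face transformers `rope_scaling` convention. Check the official model card for the exact values before editing a real `config.json`.

```python
# Minimal sketch: derive a YaRN rope_scaling entry for extending the context.
# ASSUMPTIONS: "256K" native = 262,144 tokens, "1M" target = 1,048,576 tokens,
# and the dict follows the Hugging Face transformers rope_scaling convention.
import json

NATIVE_CONTEXT = 256 * 1024   # 262,144 tokens
TARGET_CONTEXT = 1024 * 1024  # 1,048,576 tokens (assumed meaning of "1M")


def yarn_rope_scaling(native: int, target: int) -> dict:
    """Return a rope_scaling dict that stretches `native` positions to `target`."""
    if target <= native:
        raise ValueError("target context must exceed the native context")
    return {
        "rope_type": "yarn",
        "factor": target / native,  # YaRN scale factor: how far positions are stretched
        "original_max_position_embeddings": native,
    }


scaling = yarn_rope_scaling(NATIVE_CONTEXT, TARGET_CONTEXT)
print(json.dumps(scaling, indent=2))
```

The factor here is simply the ratio of target to native length, so the numbers above give a factor of 4. Older transformers configs spell the first key `"type"` instead of `"rope_type"`.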

3. Agentic Coding Support

The model is strong at tool calling and works with agentic coding platforms such as Qwen Code and Cline. It uses a specially designed function-call format, which lets it plug into existing agent workflows.
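
In practice, most clients see tool calls through an OpenAI-compatible chat API rather than the model's raw function-call format. The sketch below shows one round of that loop: a tool schema, a registry of handlers, and a dispatcher that turns an assistant message's `tool_calls` into `tool` replies. It assumes that the serving stack translates the model's native format into the OpenAI `tool_calls` structure. The `read_file` tool and the example message are hypothetical.

```python
# Minimal sketch of tool calling through an OpenAI-compatible chat API.
# ASSUMPTIONS: the server converts the model's native function-call format into
# OpenAI-style "tool_calls"; `read_file` is a hypothetical, stubbed tool.
import json

# Tool definition in the OpenAI "tools" JSON-schema format.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "read_file",
            "description": "Read a text file from the repository.",
            "parameters": {
                "type": "object",
                "properties": {"path": {"type": "string"}},
                "required": ["path"],
            },
        },
    }
]


def read_file(path: str) -> str:
    """Hypothetical tool implementation, stubbed so the sketch is self-contained."""
    return f"<contents of {path}>"


REGISTRY = {"read_file": read_file}


def dispatch_tool_calls(message: dict) -> list:
    """Run each tool call in an assistant message and build 'tool' reply messages."""
    replies = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        args = json.loads(fn["arguments"])  # arguments arrive as a JSON string
        result = REGISTRY[fn["name"]](**args)
        replies.append({"role": "tool", "tool_call_id": call["id"], "content": result})
    return replies


# Example assistant message shaped like an OpenAI-compatible response.
assistant_msg = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {
            "id": "call_1",
            "type": "function",
            "function": {"name": "read_file", "arguments": '{"path": "src/main.py"}'},
        }
    ],
}
print(dispatch_tool_calls(assistant_msg))
```

The client then appends the returned `tool` messages to the conversation and sends it back, and the model continues until it answers without further tool calls.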

4. MoE Architecture

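Qwen3-Coder-480B-A35B-Instruct uses a Mixture-of-Experts (MoE) architecture with 480B total parameters, of which only 35B are activated for each token (hence "A35B" in the name). A router sends each token to a small subset of expert sub-networks. As a result, per-token compute is closer to that of a 35B dense model, while all 480B parameters still have to be stored in memory.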
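
The routing idea can be illustrated with a toy top-k gate. The sketch softmaxes router scores, keeps the k highest-scoring experts, renormalizes their weights, and mixes only those experts' outputs. The four scalar "experts", the top-2 choice, and the router logits are illustrative assumptions, not Qwen3-Coder's real configuration. The last lines compute the share of parameters active per token from the published 480B/35B figures.

```python
# Toy sketch of top-k Mixture-of-Experts routing in pure Python.
# ASSUMPTIONS: 4 scalar experts, top-2 routing and the router logits are
# illustrative only; they are not Qwen3-Coder's real configuration.
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, router_logits, experts, k=2):
    """Route input x to the top-k experts and mix their outputs by gate weight."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    # Only the k selected experts are evaluated; the others stay idle for this input.
    return sum(probs[i] / norm * experts[i](x) for i in top), top


# Each toy "expert" just scales its input; s=s binds the value at creation time.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
y, chosen = moe_forward(1.0, [0.1, 2.0, 0.3, 1.5], experts, k=2)
print(chosen, round(y, 3))

# Same idea at full scale: share of parameters active per token.
active_fraction = 35 / 480
print(f"active fraction: {active_fraction:.1%}")
```

Only about 7% of the weights do work for any given token. This is why inference costs resemble those of a much smaller model even though memory requirements do not.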

Advantages

1. Open-Source and Free

Unlike proprietary models, which charge per use and impose usage restrictions, Qwen3-Coder's weights are openly released. You can run it locally or integrate it into your business without per-token API fees. This democratizes access to advanced AI coding tools.

2. Flexible Deployment Options

The model can be deployed on a range of hardware, from multi-GPU servers to more modest machines that offload part of the weights to system RAM. Quantized versions, from 2-bit to 8-bit, are available and trade some accuracy for a much smaller memory footprint.
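
A quick way to see why quantization matters for a 480B-parameter model is a back-of-the-envelope estimate: weight memory is roughly parameters × bits per weight / 8 bytes. This is a simplifying assumption. Real quantized files differ because some tensors stay at higher precision and quantization stores extra scale data. The KV cache and runtime buffers are not counted at all.

```python
# Back-of-the-envelope weight-memory estimate for different quantization levels.
# ASSUMPTION: memory ~ parameters * bits / 8. Real quantized files differ (mixed
# precision, quantization scales); KV cache and runtime buffers are not counted.
TOTAL_PARAMS = 480e9  # all experts must be stored, not just the 35B active ones


def weight_gb(params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for bits in (16, 8, 4, 2):
    print(f"{bits:>2}-bit: ~{weight_gb(TOTAL_PARAMS, bits):,.0f} GB")
```

By this estimate the weights shrink from roughly 960 GB at 16-bit to roughly 120 GB at 2-bit. That difference separates a multi-GPU server from a well-equipped workstation.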

3. Community and Ecosystem

Qwen3-Coder has an active community and runs in popular local-inference tools such as Ollama, LM Studio, and llama.cpp, which makes it easy to get started and keeps the surrounding tooling improving.

Options and Use Cases

1. Local Deployment

For developers who prefer to run models locally, quantized versions of Qwen3-Coder can run on a system with as little as 24GB of VRAM and 128GB of system RAM, with most of the weights offloaded to RAM at some cost in speed. This puts it within reach of individual developers and small teams.
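
Whether a given quantization fits such a machine can be checked with simple arithmetic. The sketch fills VRAM first and offloads the remainder to system RAM, keeping some headroom on each side. The reserve sizes, the "fill VRAM first" split, and the ~120 GB input (the naive 2-bit estimate for 480B parameters) are illustrative assumptions. Real runtimes such as llama.cpp choose which tensors to offload in more sophisticated ways.

```python
# Rough check of whether a quantized model fits in VRAM + system RAM when part
# of it is offloaded. ASSUMPTIONS: the reserves, the naive "fill VRAM first"
# split and the example weight size are illustrative only.
def plan_offload(weights_gb: float, vram_gb: float, ram_gb: float,
                 vram_reserve_gb: float = 4.0, ram_reserve_gb: float = 8.0) -> dict:
    """Split weights between GPU and CPU memory and report whether they fit."""
    gpu_budget = max(vram_gb - vram_reserve_gb, 0.0)    # leave room for the KV cache
    on_gpu = min(weights_gb, gpu_budget)
    on_cpu = weights_gb - on_gpu                        # remainder offloaded to RAM
    fits = on_cpu <= max(ram_gb - ram_reserve_gb, 0.0)  # leave room for the OS
    return {"on_gpu_gb": on_gpu, "on_cpu_gb": on_cpu, "fits": fits}


# ~120 GB is the naive 2-bit estimate for 480B parameters (480e9 * 2 / 8 bytes).
print(plan_offload(weights_gb=120, vram_gb=24, ram_gb=128))
```

With these assumptions a 2-bit build fits on a 24GB GPU plus 128GB of RAM. A ~240 GB 4-bit build does not.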

2. Cloud and Enterprise Solutions

Larger teams can serve the full model on cloud GPUs or use a hosted API. This removes the need for local hardware and lets capacity scale with demand.

3. Integration with Existing Tools

Common serving stacks expose the model through OpenAI-compatible APIs, so it can slot into existing tools and workflows by changing little more than the endpoint URL and model name. This smooths the transition for teams already using other AI coding assistants.
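
The sketch below shows what such an integration looks like at the HTTP level, using only Python's standard library to target the standard `/v1/chat/completions` route. `BASE_URL` and `MODEL` are placeholders. Use whatever URL and served model name your server (for example vLLM, llama.cpp's server, or Ollama) actually exposes. The request is built but not sent, so the snippet runs without a server.

```python
# Minimal sketch of calling a self-hosted Qwen3-Coder through an
# OpenAI-compatible /v1/chat/completions endpoint with only the standard library.
# ASSUMPTIONS: BASE_URL and MODEL are placeholders for your own server's values.
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"          # hypothetical local server
MODEL = "Qwen/Qwen3-Coder-480B-A35B-Instruct"  # served model name may differ


def build_chat_request(prompt: str, api_key: str = "EMPTY") -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=600) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


req = build_chat_request("Write a Python function that reverses a string.")
print(req.full_url, req.get_method())
```

Calling `send(req)` performs the actual HTTP request once a server is running. Because the payload follows the OpenAI schema, an existing client can usually be repointed by changing only `BASE_URL` and `MODEL`.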

Conclusion

Qwen3-Coder-480B-A35B-Instruct stands as a testament to the power of open-source innovation. It offers performance comparable to proprietary models while remaining free and openly available. That lets developers and businesses harness advanced AI coding tools without per-use fees or vendor lock-in. Whether you're an individual developer or part of a large team, Qwen3-Coder is a compelling choice for your coding needs.

China | Technology, AI, LLM, China, QWEN | slashnews.co.uk